README

Hello internet surfers! The goal of this post is to set up GoAccess for server-side reporting of our website visitors (and other stuff eventually). Here’s the program description from their website:

GoAccess is an open source real-time web log analyzer and interactive viewer that runs in a terminal in *nix systems or through your browser. It provides fast and valuable http statistics for system administrators that require a visual server report on the fly.

The plan is to start with the vanilla (default) configuration and slowly tweak settings to end up with a lean and accurate reporting system for our site. I’ll publish new parts as time allows. My server information is:

- Debian 12.8

- Apache 2.4.62

- GoAccess 1.7 (from Debian repository)

Why Are We Doing This?

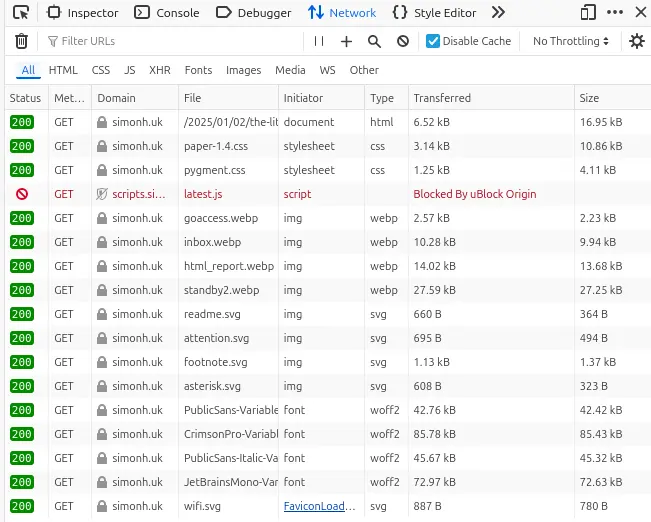

Client-side javascript analytics scripts have a big drawback. Look at the screenshot below:

See the problem? Simple Analytics, which I’ve been trying out, is massively under-reporting my visitors. That might have something to do with the fact that over 900 million people (including me) are using ad blockers! Luckily we have access to our server logs, and they’re going to give us far more accurate statistics. Let’s dive in!

We’ll start by dealing with crawlers.

Part 1 – Crawlers

simon@server:~ [ssh] $ cd /etc/goaccess/

simon@server:/etc/goaccess [ssh] $ ll

total 48K

drwxr-xr-x 2 root root 4.0K Aug 20 2023 .

drwxr-xr-x 119 root root 12K Jan 2 16:06 ..

-rw-r--r-- 1 root root 3.3K Mar 1 2021 browsers.list

-rw-r--r-- 1 root root 21K Jan 4 2023 goaccess.conf

-rw-r--r-- 1 root root 1.7K Mar 1 2021 podcast.list

Let’s become root, so we don’t have to bother typing sudo all the time:

simon@server:/etc/goaccess [ssh] $ sudo -s

[sudo] password for simon:

root@server:/etc/goaccess#

Let’s find out how many lines are in goaccess.conf, as generally the more lines, the more options we can fiddle with:

root@server:/etc/goaccess# cat goaccess.conf | wc -l

757

757 lines, so quite a few. As always, I’ll keep track of changes I make to the file with GNU RCS. I’ve written a previous post about using RCS for managing configuration files, available here

root@server:/etc/goaccess# mkdir RCS

root@server:/etc/goaccess# ci -l goaccess.conf

RCS/goaccess.conf,v <-- goaccess.conf

enter description, terminated with single '.' or end of file:

NOTE: This is NOT the log message!

>> Initial version

>> .

initial revision: 1.1

done

root@server:/etc/goaccess#

Right, now we’ve safely got the unedited file checked in, let’s run goaccess with the default configuration. All we’re interested in for now is how many visitors this site has had today. Running the command below will output a few screenfuls of information. I want section 9, Virtual Hosts.[1]

root@server:/etc/goaccess# goaccess /var/log/apache2/other_vhosts_access.log -c

9 - Virtual Hosts

Hits h% Vis. v% Tx. Amount Data

---- ------ ---- ------ ---------- ----

847 46.93% 172 69.92% 13.55 MiB www.simonh.uk

So, we’re told I’ve had 172 visitors today. Good luck getting more than that, Google![2] But before I officially announce this as the most popular site on the whole internet, I’d better double-check my numbers…
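As a rough cross-check, it helps to know what is being counted. As far as I understand it, GoAccess treats requests that share the same IP, the same date and the same user agent as one visitor. Below is a minimal sketch of that idea in awk, run against three made-up lines in Apache’s vhost_combined layout (the host name, addresses and user agents are invented for illustration). It is not how GoAccess is implemented, just the same rule applied by hand.

```shell
# Sample data only: three requests, two of them from the same IP and browser.
log=$(mktemp)
cat > "$log" <<'EOF'
www.example.org:443 203.0.113.5 - - [02/Jan/2025:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (X11; Linux x86_64)"
www.example.org:443 203.0.113.5 - - [02/Jan/2025:10:05:00 +0000] "GET /about HTTP/1.1" 200 256 "-" "Mozilla/5.0 (X11; Linux x86_64)"
www.example.org:443 198.51.100.7 - - [02/Jan/2025:11:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "ExampleBot/1.0"
EOF

# Splitting on double quotes puts vhost, IP and timestamp in $1
# and the user agent in $6.
visitors=$(awk -F'"' '
{
    split($1, head, " ")           # head[2] = IP, head[5] = "[dd/Mon/yyyy:hh:mm:ss"
    day = substr(head[5], 2, 11)   # keep only dd/Mon/yyyy
    seen[head[2] SUBSEP day SUBSEP $6] = 1
}
END { n = 0; for (k in seen) n++; print n }' "$log")

echo "unique visitors: $visitors"
rm -f "$log"
```

The sample has three hits but only two visitors, because the first two lines share an IP, a date and a user agent. Pointing the same awk program at the real log should land in the same ballpark as the Vis. column, though I would not expect an exact match.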

Open /etc/goaccess/goaccess.conf with your editor of choice, and find the following section:

# Ignore crawlers from being counted.

# This will ignore robots listed under browsers.c

# Note that it will count them towards the total

# number of requests, but excluded from any of the panels.

#

ignore-crawlers true

# Parse and display crawlers only.

# This will ignore all hosts except robots listed under browsers.c

# Note that it will count them towards the total

# number of requests, but excluded from any of the panels.

#

crawlers-only false

# Unknown browsers and OS are considered as crawlers

#

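unknowns-as-crawlers true

The excerpt above already shows ignore-crawlers and unknowns-as-crawlers set to true, which is where we’ll end up; both start out as false. I’m going to first change ignore-crawlers from false to true, and then check in the change.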
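If you would rather not open an editor, the same kind of edit can be scripted. Here is a minimal sketch using GNU sed on a scratch copy, so nothing under /etc is touched. The helper name set_option is made up for this example, and the scratch file only contains a few lines resembling the real config.

```shell
# set_option FILE KEY VALUE
# Rewrites an uncommented "KEY <something>" line in FILE to "KEY VALUE".
# Relies on GNU sed (-i and -E), which is what Debian ships.
set_option() {
    sed -i -E "s/^($2)[[:space:]]+.*/\1 $3/" "$1"
}

# Scratch copy with the three crawler options at false.
scratch=$(mktemp)
cat > "$scratch" <<'EOF'
# Ignore crawlers from being counted.
ignore-crawlers false
# Parse and display crawlers only.
crawlers-only false
# Unknown browsers and OS are considered as crawlers
unknowns-as-crawlers false
EOF

set_option "$scratch" ignore-crawlers true

# Show only the option lines, not the comments.
grep -E '^[a-z-]+ ' "$scratch"
```

Only the ignore-crawlers line changes; the comments and the other two options are left alone. Whichever way the file is edited, the change still gets checked in with ci afterwards.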

root@server:/etc/goaccess# ci -l goaccess.conf

RCS/goaccess.conf,v <-- goaccess.conf

new revision: 1.2; previous revision: 1.1

enter log message, terminated with single '.' or end of file:

>> Ignore-crawlers = true

>> .

done

Now we’ll run goaccess again and see how that change has affected our visitors:

root@server:/etc/goaccess# goaccess /var/log/apache2/other_vhosts_access.log -c

9 - Virtual Hosts

Hits h% Vis. v% Tx. Amount Data

---- ------ ---- ------ ---------- ----

636 41.79% 90 69.23% 7.37 MiB www.simonh.uk

So by just ignoring crawlers, my visitors have been nearly halved. I don’t know whether to be happy or sad about that. But let’s keep going. The next obvious thing to do is to treat visitors with an unknown browser or OS as crawlers too (makes sense, since they probably are). So let’s throw them away as well by setting unknowns-as-crawlers to true, and check in our change.

root@server:/etc/goaccess# ci -l goaccess.conf

RCS/goaccess.conf,v <-- goaccess.conf

new revision: 1.3; previous revision: 1.2

enter log message, terminated with single '.' or end of file:

>> unknowns-as-crawlers = true

>> .

done

Let’s run goaccess yet again to see if I’ve lost any more visitors:

9 - Virtual Hosts

Hits h% Vis. v% Tx. Amount Data

---- ------ ---- ------ ---------- ----

550 78.57% 58 68.24% 6.36 MiB www.simonh.uk

So the second change has wiped out another third of my visitors! By changing just those two options from false to true, I’ve gone from 172 visitors down to 58. You’ll have to bear with me while I ascend a mountain to contemplate whether ignorance is bliss or the truth shall set you free.
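To put numbers on "nearly halved" and "another third", here is a quick bit of arithmetic on the three visitor counts reported above (172, 90 and 58). The variable names are just labels I picked for this sketch.

```shell
# Percentage drop in visitors after each config change, and overall.
summary=$(awk 'BEGIN {
    start = 172; no_crawlers = 90; no_unknowns = 58
    printf "ignore-crawlers:      %d -> %d (%.1f%% fewer)\n", start, no_crawlers, 100 * (start - no_crawlers) / start
    printf "unknowns-as-crawlers: %d -> %d (%.1f%% fewer)\n", no_crawlers, no_unknowns, 100 * (no_crawlers - no_unknowns) / no_crawlers
    printf "both together:        %d -> %d (%.1f%% fewer)\n", start, no_unknowns, 100 * (start - no_unknowns) / start
}')
echo "$summary"
```

That works out to drops of 47.7% and 35.6% for the two steps, and 66.3% overall, so roughly two out of every three "visitors" in the default report were crawlers or unidentifiable clients.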

See you in the next part…

Part 2 – Emailing the Report

Turns out there are no mountains near me (plus it’s cold) so I’ll continue. I wrote a post back in 2021 about getting a daily email of visitors using goaccess, which I’ll slightly modify here.

Here is the script we’ll use, which we’ll call apache_report:

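#!/bin/bash

# Timestamp used in the report file name and the email subject
now=$(date +%Y-%m-%d_%H%M%S)

# Build the HTML report from the vhost access log
goaccess /var/log/apache2/other_vhosts_access.log --log-format=VCOMBINED -o "report_$now.html"

# Compress it; gzip replaces the file with report_$now.html.gz
gzip "report_$now.html"

# Email the compressed report as an attachment
echo "Report" | mail -s "Apache Report - $now" -A "report_$now.html.gz" you@youremail.address

Remember to change you@youremail.address to your email address!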

If you haven’t got round to setting up a mail server yet, you can follow my tutorial for OpenSMTPD, or find one elsewhere on the net.

First thing to do is save apache_report on the server and check it works.

simon@server:~/tmp [ssh] $ nano apache_report

Copy the script above and paste it into your editor. With that saved, let’s run it.

simon@server:~/tmp [ssh] $ sudo bash apache_report

[PARSING /var/log/apache2/other_vhosts_access.log] {256} @ {0/s}

Cleaning up resources...

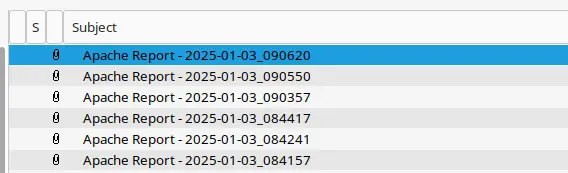

simon@server:~/tmp [ssh] $

No errors. Good. Let’s check our email:

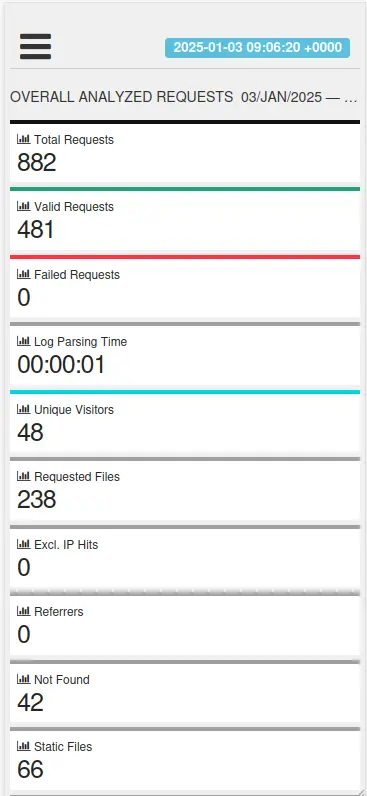

I’ve sent a few to myself, as you can see. Below is how the email looks on mobile. I’ve formatted it like this to make it easier to view here on this page:

Awesome! The final thing to do in part 2 is to set up a cron job so we get a daily email with our stats.

simon@server:~/tmp [ssh] $ sudo cp apache_report /etc/cron.daily/

[sudo] password for simon:

simon@server:~/tmp [ssh] $ ls /etc/cron.daily/

00logwatch apt-compat dpkg mod-pagespeed

apache2 aptitude logrotate ntp

apache_report bsdmainutils.dpkg-remove man-db popularity-contest

Make our file executable:

simon@server:~/tmp [ssh] $ sudo chmod +x /etc/cron.daily/apache_report

One thing to warn you about: each email is currently 148 kB in size (gzipped). Extracted, the reports come out at about 450 kB. I know we aren’t in 1980 anymore, but make sure you’ve got plenty of storage with your mail provider!
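One detail worth thinking about once the script runs from cron: it writes its report into whatever directory the job happens to be started from, and the gzipped files pile up there. Below is a minimal sketch of one way to avoid that, using the same goaccess, gzip and mail steps but inside a throwaway directory that is removed afterwards. The function name send_report and the LOG variable are illustrative names, not part of the original script, and I have not run this variant from cron myself.

```shell
#!/bin/bash
# Sketch only: same steps as apache_report, run in a temporary directory.
# LOG and send_report are hypothetical names chosen for this example.
LOG=/var/log/apache2/other_vhosts_access.log

send_report() {
    local recipient=$1
    local now workdir
    now=$(date +%Y-%m-%d_%H%M%S)
    workdir=$(mktemp -d) || return 1

    (
        # Work inside the temporary directory so nothing is left behind.
        cd "$workdir" || exit 1
        goaccess "$LOG" --log-format=VCOMBINED -o "report_$now.html" &&
            gzip "report_$now.html" &&
            echo "Report" | mail -s "Apache Report - $now" -A "report_$now.html.gz" "$recipient"
    )
    local status=$?

    # Remove the temporary directory whether or not the steps succeeded.
    rm -rf "$workdir"
    return "$status"
}

# Usage (uncomment and substitute your own address):
# send_report you@youremail.address
```

The subshell keeps the cd from leaking into the rest of the script, and the function returns the exit status of the report steps, so a failed goaccess run shows up as a non-zero exit.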

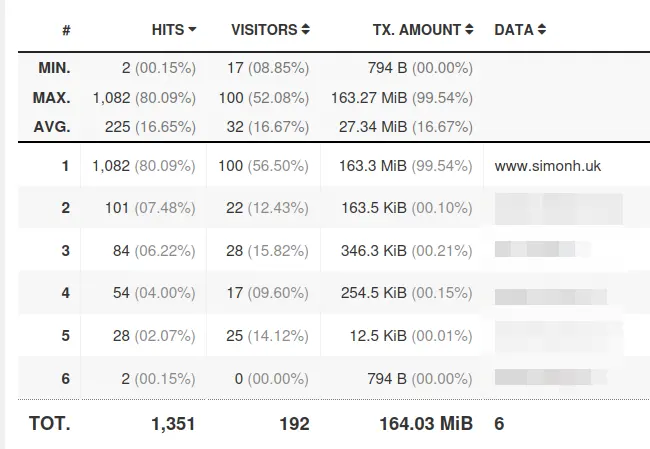

The next day @ 06:25:55 my report arrives in my inbox (table below is the vhosts section):

Part 3 – ?

Footnotes

1 Note to self: trim all that trailing whitespace with sed -i 's/[[:space:]]*$//' <file>

2 I’m informed that Google shares numbers in the millions or billions. Maybe trillions. Still, 172 should put me in the top 10 I expect.

Updates

2024-01-04: Add why are we doing this? section to part 1

2024-01-03: Add Part 2